Polynomial multiplication is widely used in zero-knowledge proofs and mathematical cryptography. However, the brute-force (traditional) approach to multiplying polynomials runs in $O(n^2)$ time. That is fine for small inputs but becomes expensive as the degree of the polynomials increases. This article takes a detailed look at polynomial multiplication to explore ways of making it faster.

- We begin with a review of the schoolbook/traditional polynomial arithmetic

- Followed by a study of different forms of representation of polynomials

- We examine and compare polynomial arithmetic in these different forms

- Finally, we look at how these forms can potentially speed up polynomial multiplication, and how this forms the basis for an algorithm called the Number Theoretic Transform (NTT)

Polynomial Multiplication - Traditional approach

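Consider two polynomials $p_1(x)$ and $p_2(x)$ of degree $n$ each:

$$p_1(x) = a_0 + a_1x + a_2x^2 + \cdots + a_nx^n$$

$$p_2(x) = b_0 + b_1x + b_2x^2 + \cdots + b_nx^n$$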

Multiplying these two polynomials by distributing multiplication over addition takes $O(n^2)$ time. Each term of $p_1(x)$ is multiplied by each term of $p_2(x)$:

$$p_1(x) \cdot p_2(x) = \sum_{i=0}^{n} \sum_{j=0}^{n} a_i b_j\, x^{i+j}$$

For example,

let ,

and .

Then,

When programmed, this is implemented in the form of nested loops.

```python
# A holds the coefficients of p1(x), lowest degree first: A = [a0, a1, ..., an]
# B holds the coefficients of p2(x), lowest degree first: B = [b0, b1, ..., bn]
# C will hold the coefficients of p1(x) * p2(x)

def multiply_polynomials(A, B):
    n = len(A)
    m = len(B)
    C = [0] * (n + m - 1)  # the product has degree (n - 1) + (m - 1)

    for i in range(n):          # i from 0 to n - 1
        for j in range(m):      # j from 0 to m - 1
            # a_i x^i * b_j x^j contributes to the coefficient of x^(i + j)
            C[i + j] += A[i] * B[j]

    return C
```

You would get the result as:

For each iteration of the outer loop, the inner loop executes $n$ times. This assumes both arrays have the same length $n$, i.e. the polynomials have equal degree. That gives $n \times n$ iterations in total, i.e. a runtime of $O(n^2)$.

We now want to see if we can optimize this and do better. Or simply, is there a way to make polynomial multiplication faster?

Ways to represent a Polynomial

There are two ways in which we can represent a polynomial: coefficient form and point form.

Coefficient Form

Polynomials are usually expressed in what is called the monomial basis, or coefficient form. This means each polynomial is written as a linear combination of powers of the variable.

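For instance, consider a polynomial $p(x)$ of degree $n$ written as

$$p(x) = a_0 + a_1x + a_2x^2 + \cdots + a_nx^n$$

It is expressed in the monomial basis, since it uses $1, x, x^2, \dots, x^n$ as the basis for its coefficients, which are $a_0, a_1, \dots, a_n$.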

In this representation, the coefficients of the polynomial can be written as a vector or an array, like $[a_0, a_1, \dots, a_n]$. The first element is the constant term (the coefficient of $x^0$), and the last element is the coefficient of $x^n$.

Note that the distributive multiplication method we looked at earlier (runtime $O(n^2)$) was applied to polynomials in coefficient form.

Point (or Value) Form

The point (or value) form representation is based on the fact that every polynomial of degree $n$ can be represented by a set of $n + 1$ points with distinct $x$-coordinates that lie on it.

For example, consider a quadratic polynomial (degree $2$):

Now take any three points (because $n + 1 = 3$) lying on this curve. We can say that these points represent the given polynomial. Conversely, if we are given only these points, we can recover the polynomial from them. Why does this work? In other words, how can a degree-$n$ polynomial be represented equivalently by $n + 1$ points?

This is because:

For every set of $n + 1$ points with distinct $x$-coordinates, there exists a unique lowest-degree polynomial, of degree at most $n$, that passes through all of them.

This lowest degree polynomial is called the Lagrange Polynomial.

For example,

given two points and , there exists a unique polynomial of degree $1$ (a line) passing through these points:

Similarly,

given three points , , and , the Lagrange polynomial of degree at most $2$ passing through these points is:

How this special polynomial is calculated given a set of points is something that is discussed in this article on Lagrange interpolation.

Uniqueness of the Lagrange Polynomial

Note that for a given set of points, there can be many higher-degree polynomials that pass through all of them. Only the lowest-degree polynomial is unique.

For instance, in the above example of points and , there exist many polynomials passing through these two points:

and are two of many polynomials of degree $2$ (quadratic) that pass through these two points.

Similarly, if you consider degree , two example polynomials passing through points and are and .

For the lowest degree, here $1$, there is only one polynomial: the line given above. It is unique, and no other polynomial of degree $1$ passes through these two points.
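The following minimal Python sketch illustrates why higher-degree polynomials through the same points are not unique. The points $(1, 3)$ and $(2, 5)$ and the line $1 + 2x$ are hypothetical values chosen for illustration, not the points from the example above. The idea is to add any multiple $c$ of $(x - 1)(x - 2)$, which is zero at both $x$-values. Each choice of $c$ gives another degree-2 polynomial through the same two points.

```python
def evaluate(coeffs, x):
    """Evaluate a polynomial given as [a0, a1, ..., an] (lowest degree first)."""
    return sum(a * x**k for k, a in enumerate(coeffs))

# Hypothetical example: two points and the unique line through them.
points = [(1, 3), (2, 5)]
line = [1, 2]                 # 1 + 2x

# (x - 1)(x - 2) = 2 - 3x + x^2 vanishes at both x-values,
# so adding any multiple of it keeps both points on the curve.
vanishing = [2, -3, 1]

def quadratic_through_points(c):
    """Return line + c * (x - 1)(x - 2) as a coefficient list."""
    padded_line = line + [0]  # pad to the same length as `vanishing`
    return [a + c * v for a, v in zip(padded_line, vanishing)]

for c in (1, -4, 10):
    q = quadratic_through_points(c)
    # every nonzero c gives a different degree-2 polynomial through both points
    assert all(evaluate(q, x) == y for x, y in points)
    print(c, q)
```

Only $c = 0$ brings the degree back down to 1, recovering the line itself.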

How is this lowest degree determined?

For every set of $n + 1$ points with distinct $x$-coordinates, there is a unique polynomial of degree at most $n$ that passes through them. Its degree is less than $n$ if all of the points lie on a lower-degree polynomial, for instance if they are all collinear. That is why we say "at most $n$": the phrase covers both the case where the degree is exactly $n$ and the cases where it is lower.

For example,

given the points and , the polynomial of lowest degree passing through them is . This is because the points are collinear, i.e. they lie on a line. One can check that the slope between any pair of them is the same:

Therefore, the lowest degree is , and is the unique Lagrange polynomial.

Similarly,

Given five points, , we find that all of them lie on a parabola with the equation

Here, all the given points lie on a lower-degree polynomial, one of degree $2$. The Lagrange polynomial therefore has degree $2$, which is less than $n$ (here $n = 4$, since there are five points).

Refer to the Appendix at the end for proof of uniqueness of the lowest degree polynomial.

Conversion Between Coefficient Form and Point Form

Since coefficient form and point form are equivalent, we can readily convert between them as we show now.

Interpolation (Point Form → Coefficient Form)

The conversion from point form to coefficient form, called interpolation, means finding the polynomial of lowest degree that passes through all the given points. One of the best-known methods for this is Lagrange interpolation, which we mentioned previously. If you are unfamiliar with it, you may go through this article.

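In short, given a set of $n + 1$ points with distinct $x$-coordinates

$$(x_0, y_0),\ (x_1, y_1),\ \dots,\ (x_n, y_n)$$

we can find the unique lowest-degree polynomial $p(x)$, of degree at most $n$, using the formula for Lagrange interpolation. This polynomial satisfies $p(x_i) = y_i$ for every $i$:

$$p(x) = \sum_{i=0}^{n} y_i \prod_{\substack{j=0 \\ j \neq i}}^{n} \frac{x - x_j}{x_i - x_j}$$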

Keep in mind that the runtime of Lagrange interpolation is $O(n^2)$.
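The $O(n^2)$ bound can be illustrated with the minimal Python sketch below, which performs Lagrange interpolation into coefficient form. It is one possible implementation, not necessarily the one the linked article uses. It builds $M(x) = \prod_j (x - x_j)$ once in $O(n^2)$. It then recovers each numerator $\prod_{j \neq i}(x - x_j)$ by synthetic division in $O(n)$. Two assumptions apply: the use of exact `fractions.Fraction` arithmetic to avoid floating-point rounding, and the helper names, which are hypothetical. The sketch also assumes all $x$-coordinates are distinct.

```python
from fractions import Fraction

def poly_from_roots(xs):
    """Coefficients (lowest degree first) of M(x) = (x - x_0)(x - x_1)...(x - x_n)."""
    m = [Fraction(1)]
    for r in xs:
        # multiply the current polynomial by (x - r)
        nxt = [Fraction(0)] * (len(m) + 1)
        for k, c in enumerate(m):
            nxt[k] -= r * c       # -r * c * x^k
            nxt[k + 1] += c       #  c * x^(k + 1)
        m = nxt
    return m

def divide_by_linear(m, r):
    """Synthetic division of m(x) by (x - r), assuming r is a root; returns the quotient."""
    q = [Fraction(0)] * (len(m) - 1)
    carry = Fraction(0)
    for k in range(len(m) - 1, 0, -1):
        carry = m[k] + carry * r
        q[k - 1] = carry
    return q

def lagrange_interpolate(points):
    """Coefficients (lowest degree first) of the unique polynomial of degree
    at most n through n + 1 points with distinct x-coordinates."""
    xs = [Fraction(x) for x, _ in points]
    ys = [Fraction(y) for _, y in points]
    m = poly_from_roots(xs)                      # O(n^2), done once
    coeffs = [Fraction(0)] * len(points)
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        numerator = divide_by_linear(m, xi)      # prod_{j != i} (x - x_j), O(n)
        denom = Fraction(1)
        for j, xj in enumerate(xs):
            if j != i:
                denom *= xi - xj                 # prod_{j != i} (x_i - x_j), O(n)
        for k, c in enumerate(numerator):
            coeffs[k] += yi * c / denom          # add y_i * L_i(x), O(n)
    return coeffs

# Hypothetical example: five points that all lie on the parabola y = 1 + x^2
print(lagrange_interpolate([(0, 1), (1, 2), (2, 5), (3, 10), (4, 17)]))
```

In the usage example, the returned coefficients are $[1, 0, 1, 0, 0]$, i.e. $1 + x^2$. The coefficients of $x^3$ and $x^4$ are zero, which matches the earlier observation that points lying on a parabola give a Lagrange polynomial of degree $2 < n$.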

Evaluation (Coefficient Form → Point Form)

The conversion from coefficient form to point form is called evaluation. It consists of evaluating the polynomial at $n + 1$ values of $x$ to obtain the corresponding values of $y$, giving a set of points that represents the polynomial. One common way to do this is Horner's rule (to be discussed in detail in a future article).

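In short, given the coefficients $a_0, a_1, \dots, a_n$ of a polynomial $p(x)$ and a value $x_0$, Horner's method evaluates $p(x_0)$ as follows:

$$p(x_0) = a_0 + x_0\Big(a_1 + x_0\big(a_2 + \cdots + x_0(a_{n-1} + x_0\, a_n)\cdots\big)\Big)$$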

This method factors out $x$ one power at a time until all the terms are processed. Let us look at an example to understand it better.

Given the polynomial and a value , we will review how Horner’s rule evaluates .

We can rewrite as follows (as shown in the generalized expression above):

Substituting :

Observe how the steps alternate between multiplications and additions. Steps 1, 3 and 5 of the above calculation are multiplications, while steps 2, 4 and 6 are additions. In total, there are $n$ multiplications and $n$ additions (here $n = 3$), giving a total runtime of $O(n)$. This is how Horner's rule evaluates a polynomial of degree $n$ at a given value of $x$ in $O(n)$ time.

Therefore, converting from coefficient to point form with this rule means evaluating the polynomial at $n + 1$ distinct $x$-values. That takes $n + 1$ times $O(n)$, i.e. $O(n^2)$.
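Horner's rule and the resulting conversion to point form might be sketched in Python as follows. Coefficients are assumed to be stored lowest degree first, as in the arrays used earlier. The function names are illustrative only.

```python
def horner(coeffs, x0):
    """Evaluate p(x0) for coeffs = [a0, a1, ..., an] (lowest degree first)."""
    result = coeffs[-1]                  # start from a_n
    for a in reversed(coeffs[:-1]):      # then a_{n-1}, ..., a_0
        result = result * x0 + a         # one multiplication and one addition
    return result

def to_point_form(coeffs, xs):
    """Evaluate at every x in xs: n + 1 calls of O(n) each, so O(n^2) overall."""
    return [(x, horner(coeffs, x)) for x in xs]

# Hypothetical example: p(x) = 1 + 2x + 3x^2
print(horner([1, 2, 3], 2))                  # 17
print(to_point_form([1, 2, 3], [0, 1, 2]))   # [(0, 1), (1, 6), (2, 17)]
```

For a degree-$n$ polynomial, the loop runs exactly $n$ times, which matches the count of $n$ multiplications and $n$ additions.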

Coefficient form VS Point form

We said that coefficient form and point form of a polynomial are equivalent, and one can be converted to the other. That is, there is no difference in the final results of addition and multiplication when done in either form. Let us examine this, with an example of addition first.

Addition in coefficient form

Consider two polynomials given in coefficient form,

Or their respective arrays of coefficients:

Now, adding the two polynomials is simply adding the two arrays element-wise, and the resultant coefficient array represents the final polynomial. Let’s verify this:

Or simply,

For two polynomials of degree $n$, we perform $n + 1$ additions to get the sum's representation. Therefore, the runtime of addition in coefficient form is $O(n)$.
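In code, coefficient-form addition is a single element-wise pass over the two arrays. The minimal Python sketch below assumes coefficients are stored lowest degree first. It pads the shorter array with zeros via `itertools.zip_longest`, so the two inputs may have different degrees. The function name is hypothetical.

```python
from itertools import zip_longest

def add_coefficient_form(A, B):
    """Add two polynomials given as coefficient arrays (lowest degree first).

    zip_longest pads the shorter array with zeros; there is one addition
    per coefficient, i.e. O(n) work.
    """
    return [a + b for a, b in zip_longest(A, B, fillvalue=0)]

# Hypothetical example: (1 + 2x + 3x^2) + (4 + 5x) = 5 + 7x + 3x^2
print(add_coefficient_form([1, 2, 3], [4, 5]))   # [5, 7, 3]
```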

Addition in point form

Consider the same two polynomials,

First, we need to convert them from coefficient form to point form. Since both polynomials have degree $2$, their sum will have degree at most $2$ as well. We therefore need three points to represent the sum (degree plus one: $2 + 1 = 3$), which requires three evaluations of each polynomial.

Let us evaluate and at to get our points.

Note: We are just choosing for simplicity. You could pick any other points for evaluation.

Now, adding the two polynomials requires adding the corresponding evaluations element-wise, that is:

These three points and give us the point representation of the sum. Let us verify whether they satisfy the polynomial we calculated earlier:

Therefore, you see that addition in both forms gives the same result, or the same polynomial, just represented in different ways.

In point-form addition, two polynomials of degree $n$ are each represented by $n + 1$ points, so we perform $n + 1$ element-wise additions to get the points that represent the sum. Therefore, the runtime of addition in point form is $O(n)$.
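A matching sketch for point-form addition is shown below. It assumes both polynomials were evaluated at the same $x$-values in the same order, and it raises an error otherwise. The sample polynomials $1 + 2x + 3x^2$ and $4 + 5x$ and the points $x = 0, 1, 2$ are hypothetical. Their sum is $5 + 7x + 3x^2$, which evaluates to $5, 15, 31$ at those points.

```python
def add_point_form(P, Q):
    """Add two polynomials given in point form as lists of (x, y) pairs.

    Assumes both were evaluated at the same x-values, in the same order.
    """
    if [x for x, _ in P] != [x for x, _ in Q]:
        raise ValueError("both polynomials must be evaluated at the same x-values")
    # one addition per point: O(n) for n + 1 points
    return [(x, yp + yq) for (x, yp), (_, yq) in zip(P, Q)]

# Hypothetical example: p(x) = 1 + 2x + 3x^2 and q(x) = 4 + 5x at x = 0, 1, 2
P = [(0, 1), (1, 6), (2, 17)]
Q = [(0, 4), (1, 9), (2, 14)]
print(add_point_form(P, Q))   # [(0, 5), (1, 15), (2, 31)]
```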

Now, let us also look at multiplication closely.

Multiplication in coefficient form

Consider two polynomials given in coefficient form:

Or their respective coefficient arrays:

and

Multiplying them using the distributive way discussed earlier gives:

The resulting polynomial is , represented by the coefficient array . The distributive method of coefficient-form multiplication takes $O(n^2)$ time, as we saw at the start of this article.

Multiplication in point form

We now consider the same polynomials and convert them to their point forms.

Since both polynomials have degree $1$, their product will have degree at most $2$. We therefore need $3$ points to represent it, which requires three evaluations of each polynomial.

So, let’s evaluate and at .

Now, to get the points that represent their product, we multiply the evaluations element-wise:

So the three points and give us the point representation of the resultant product.

Let’s verify if they satisfy the polynomial product we got earlier:

Therefore, you can see that multiplication in both forms gives the same polynomial, just represented in different ways.

In summary, take two polynomials $p_1(x)$ and $p_2(x)$ of degree $n$. Their product will have degree at most $2n$, so we need $2n + 1$ points to represent it. To convert both polynomials into point form, we evaluate each one at the same $2n + 1$ values of $x$:

$$\{(x_i,\ p_1(x_i))\}_{i=0}^{2n} \quad \text{and} \quad \{(x_i,\ p_2(x_i))\}_{i=0}^{2n}$$

We then perform point-form multiplication by multiplying these two sets element-wise. This takes $2n + 1$ multiplications, i.e. a runtime of $O(n)$.

This gives us the points that represent the product $p_1(x) \cdot p_2(x)$:

$$\{(x_i,\ p_1(x_i) \cdot p_2(x_i))\}_{i=0}^{2n}$$
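The element-wise step can be sketched in Python as follows, assuming both point sets share the same $x$-values. The degree-1 polynomials $1 + 2x$ and $3 + x$ are hypothetical. Their product $3 + 7x + 2x^2$ has degree 2, so three points ($x = 0, 1, 2$) suffice.

```python
def multiply_point_form(P, Q):
    """Multiply two polynomials given in point form as lists of (x, y) pairs.

    Assumes both were evaluated at the same x-values, enough of them
    (2n + 1) to represent the product.
    """
    if [x for x, _ in P] != [x for x, _ in Q]:
        raise ValueError("both polynomials must be evaluated at the same x-values")
    # one multiplication per point: O(n)
    return [(x, yp * yq) for (x, yp), (_, yq) in zip(P, Q)]

# Hypothetical example: p1(x) = 1 + 2x and p2(x) = 3 + x at x = 0, 1, 2
P1 = [(0, 1), (1, 3), (2, 5)]
P2 = [(0, 3), (1, 4), (2, 5)]
print(multiply_point_form(P1, P2))   # [(0, 3), (1, 12), (2, 25)]
```

These three points lie on $3 + 7x + 2x^2$, the product computed in coefficient form.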

The amazing thing to note here is that addition takes the same $O(n)$ time in both coefficient and point form, but multiplication in point form is significantly faster than in coefficient form. In point form, we perform $2n + 1$ element-wise multiplications, giving a runtime of $O(n)$. That is far better than the $O(n^2)$ required for coefficient-form multiplication!

However, there is still an issue: we haven't considered the overhead of converting to point form and back.

So, let's look at the complete process of multiplication in point form, which involves three steps:

1. Conversion from coefficient form to point form

   We evaluate the two degree-$n$ polynomials to be multiplied at $2n + 1$ values of $x$, obtaining $2n + 1$ evaluations of each. This takes $O(n^2)$ using Horner's method.

2. Element-wise multiplication in point form

   We multiply these two sets element-wise to get $2n + 1$ evaluations that give the point-form representation of their product. This takes $O(n)$.

3. Conversion from point form to coefficient form

   We calculate the unique lowest-degree polynomial (in coefficient form) that passes through all $2n + 1$ resulting points. This takes $O(n^2)$ using Lagrange interpolation.

Therefore, the overall runtime for the steps above is:

$$O(n^2) + O(n) + O(n^2) = O(n^2)$$

This is no better than the $O(n^2)$ we started with. We therefore need to explore whether any optimizations can make this process faster.
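The three steps can be combined in a small Python sketch to check that the point-form route yields the same coefficients as the nested-loop method. Several choices here are assumptions for illustration: the evaluation points $x = 0, 1, \dots, 2n$, the function names, and the use of exact `fractions.Fraction` arithmetic in the interpolation step. As the analysis predicts, the pipeline is still $O(n^2)$ overall.

```python
from fractions import Fraction

def schoolbook(A, B):
    """O(n^2) coefficient-form multiplication (nested loops)."""
    C = [0] * (len(A) + len(B) - 1)
    for i, a in enumerate(A):
        for j, b in enumerate(B):
            C[i + j] += a * b
    return C

def horner(coeffs, x0):
    """O(n) evaluation of [a0, ..., an] at x0."""
    result = 0
    for a in reversed(coeffs):
        result = result * x0 + a
    return result

def interpolate(points):
    """O(n^2) Lagrange interpolation into coefficients (lowest degree first)."""
    xs = [Fraction(x) for x, _ in points]
    m = [Fraction(1)]                                # M(x) = prod_j (x - x_j)
    for r in xs:                                     # multiply M(x) by (x - r)
        m = [(m[k - 1] if k > 0 else 0) - r * (m[k] if k < len(m) else 0)
             for k in range(len(m) + 1)]
    coeffs = [Fraction(0)] * len(points)
    for i, (xi, (_, yi)) in enumerate(zip(xs, points)):
        q, carry = [Fraction(0)] * len(points), Fraction(0)
        for k in range(len(points), 0, -1):          # synthetic division M(x) / (x - x_i)
            carry = m[k] + carry * xi
            q[k - 1] = carry
        denom = Fraction(1)
        for j, xj in enumerate(xs):
            if j != i:
                denom *= xi - xj                     # prod_{j != i} (x_i - x_j)
        for k in range(len(points)):
            coeffs[k] += yi * q[k] / denom
    return coeffs

def multiply_via_point_form(A, B):
    n_points = len(A) + len(B) - 1                   # 2n + 1 when both have degree n
    xs = range(n_points)                             # assumption: x = 0, 1, ..., 2n
    ya = [horner(A, x) for x in xs]                  # step 1: O(n^2)
    yb = [horner(B, x) for x in xs]
    yc = [a * b for a, b in zip(ya, yb)]             # step 2: O(n)
    return interpolate(list(zip(xs, yc)))            # step 3: O(n^2)

A, B = [1, 2, 3], [4, 5, 6]
print(schoolbook(A, B))
print(multiply_via_point_form(A, B))
```

Both calls produce the coefficients of the same polynomial, $4 + 13x + 28x^2 + 27x^3 + 18x^4$. The second one prints them as `Fraction` values.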

Optimizing conversion

The key point to keep in mind is that multiplication takes $O(n^2)$ in coefficient form but only $O(n)$ in point form (element-wise). Suppose we can convert from coefficient form to point form and back (steps 1 and 3 mentioned above) faster than $O(n^2)$. Then multiplication can run in sub-quadratic time.

Note that this approach offers nothing for polynomial addition, since addition already runs in $O(n)$ in both coefficient form and point form.

So now let us brainstorm a few ways we might make the conversion from coefficient to point form faster.

What if we knew a point whose evaluation could give us the values of several related points, saving us from repeated calculations?

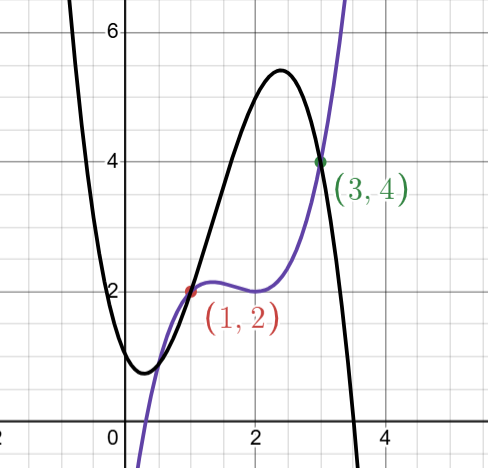

For example, if we had a polynomial with a symmetric graph, evaluating one point would tell us the evaluation for its corresponding symmetric point as well.

Consider the polynomial .

Observe how,

Or, more simply, observe how for all ,

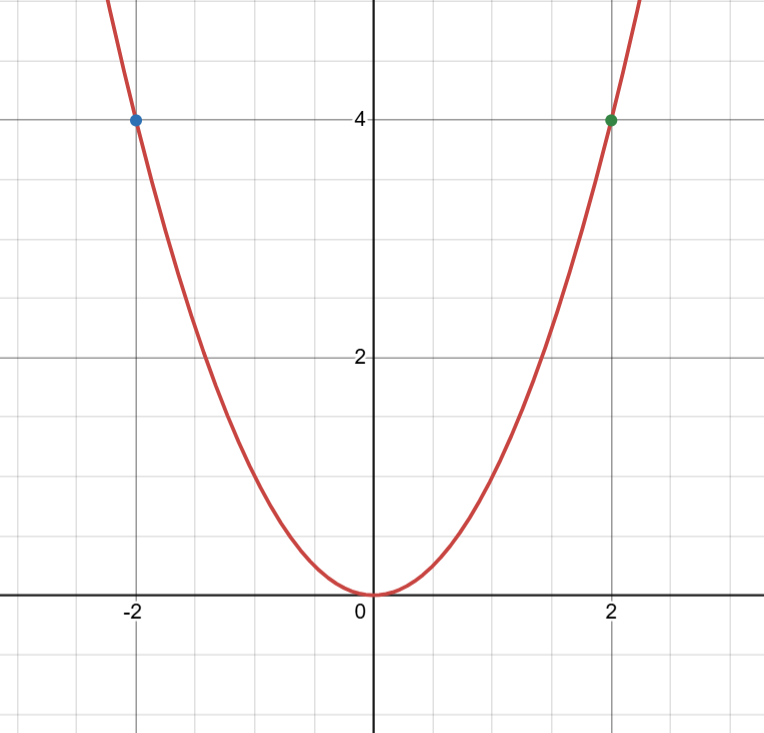

This is not just true for this particular polynomial. It generalizes to all polynomials that contain only even powers of $x$, which are called even polynomials.

For example, consider the even polynomial (containing only terms with even powers of $x$):

In the graph above, it is easy to observe that

Visually speaking, the graphs of even polynomials are mirrored about the $y$-axis. They evaluate to the same $y$ for both the positive and the negative value of any given $x$.

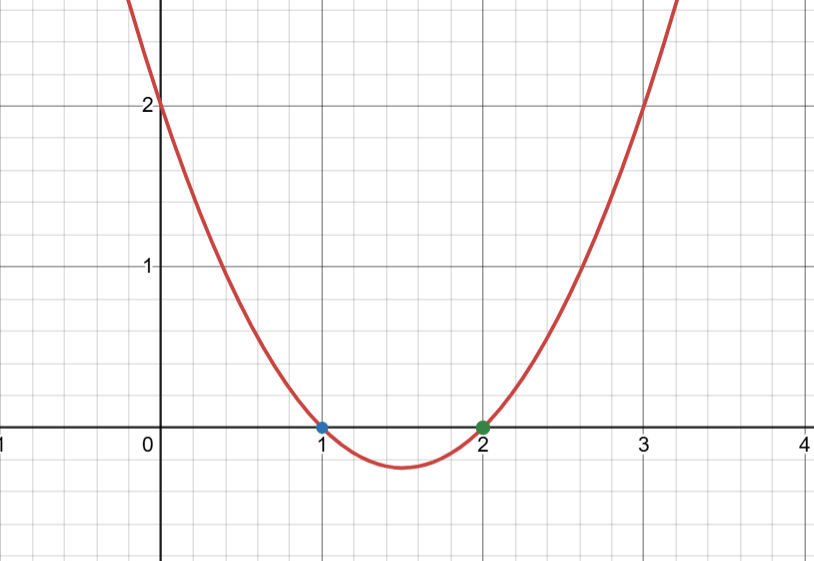

What about odd polynomials, which contain only odd powers of $x$?

Consider .

Observe how

In the graph above, observe how, for all ,

Again, this is not just true for this particular polynomial. It generalizes to all odd polynomials, i.e. polynomials containing only terms with odd powers of $x$. For example, consider the polynomial

In the graph above, observe that

Visually, the graphs of all odd polynomials are symmetric about the origin, which makes the above equality true for all of them.

Now you can see that after evaluating certain points, we can get the evaluations at other points without any extra calculation. In the examples above, for both even and odd polynomials, knowing the evaluation at $x$ also gives us the evaluation at $-x$ (up to a sign change in the odd case).
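The symmetry can be checked numerically with the Python sketch below. The even polynomial $5 - 3x^2 + 2x^4$ and the odd polynomial $4x - x^3$ are hypothetical examples, not the ones plotted above. The helper `evaluate_pair` and its `parity` parameter are also illustrative assumptions. The helper shows how a single evaluation yields both $p(x)$ and $p(-x)$.

```python
def evaluate(coeffs, x):
    """Evaluate [a0, a1, ..., an] (lowest degree first) at x."""
    return sum(a * x**k for k, a in enumerate(coeffs))

even = [5, 0, -3, 0, 2]   # hypothetical even polynomial: 5 - 3x^2 + 2x^4
odd = [0, 4, 0, -1]       # hypothetical odd polynomial: 4x - x^3

for x in range(1, 6):
    assert evaluate(even, -x) == evaluate(even, x)    # even: p(-x) = p(x)
    assert evaluate(odd, -x) == -evaluate(odd, x)     # odd:  p(-x) = -p(x)

def evaluate_pair(coeffs, x, parity):
    """Return (p(x), p(-x)) using a single evaluation of p."""
    y = evaluate(coeffs, x)
    return (y, y) if parity == "even" else (y, -y)

print(evaluate_pair(even, 2, "even"))   # (25, 25)
print(evaluate_pair(odd, 3, "odd"))     # (-15, 15)
```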

We can exploit this fact to make polynomial multiplication faster, which is exactly what a beautiful algorithm called the Number Theoretic Transform (NTT) allows us to do. NTT performs evaluation and interpolation in $O(n \log n)$ time by recursively exploiting the symmetries of carefully chosen evaluation points, thereby making the conversion sub-quadratic.

But since NTT operates over a finite field, there are no negative values of $x$ in the usual sense for us to work with. This is where the concepts of multiplicative subgroups, cyclicity and roots of unity come into the picture. These concepts will allow us to exploit the symmetries present in finite fields to perform polynomial multiplication more efficiently. We'll explore how NTT works in detail in upcoming articles.

Appendix

Uniqueness of lowest degree polynomial proof

Suppose two polynomials $p(x)$ and $q(x)$ of equal degree interpolate the same set of points. We first define the polynomial $d(x) = p(x) - q(x)$.

We will then show that the only possibility is $d(x) = 0$. Otherwise we would end up with a nonzero polynomial that has more roots than its degree, which we show is impossible. Let us look at these steps in detail now.

Let us assume that the lowest-degree Lagrange polynomial is not unique. Then there are at least two distinct polynomials of lowest degree that pass through all the given points. Let these two polynomials be $p(x)$ and $q(x)$. Now, define the polynomial $d(x)$ as the difference between them:

$$d(x) = p(x) - q(x)$$

If we show that $d(x)$ is $0$ for all values of $x$, then we will have shown that $p(x)$ equals $q(x)$, and therefore that the Lagrange polynomial is unique.

Since the degree of both $p(x)$ and $q(x)$ is at most $n$, it follows from simple algebraic subtraction that $d(x)$ must also have degree at most $n$.

Also, since $p(x)$ and $q(x)$ pass through the same $n + 1$ points, they evaluate to the same $y$-value at each of the $n + 1$ $x$-values.

Note: Graphically, when two different polynomials evaluate to the same $y$-value for a given $x$, it means that they intersect at that point. For example:

At , both give , so they intersect at the point . In this case, we're dealing with different polynomials. Another way to get the same $y$ for a given $x$ is for the two to actually be the same polynomial! In that case, they will have the same $y$ for all values of $x$, not just for some particular values of $x$.

In the case of $p(x)$ and $q(x)$, they are equal for at least $n + 1$ different values of $x$. This can be expressed mathematically as:

$$p(x_i) = q(x_i) \quad \text{for } i = 0, 1, \dots, n$$

So the difference between $p(x)$ and $q(x)$ is zero at all of these $n + 1$ points. That is,

$$d(x_i) = p(x_i) - q(x_i) = 0 \quad \text{for } i = 0, 1, \dots, n$$

Therefore, $d(x)$ evaluates to zero at $n + 1$ points, which implies that it is the zero polynomial. Let us see more clearly why.

A zero polynomial is one that evaluates to zero for all values of $x$ in the domain. The simplest example of a zero polynomial is the constant polynomial:

$$0$$

Another way of looking at this is, if our domain of polynomial evaluation - the set of points at which the polynomial can be evaluated - is, say, , then a zero polynomial for this domain can be:

Because,

We could have many more zero polynomials for the domain such as:

Notice that each of and evaluates to zero for the domain , and thus is a zero polynomial. We can have many more. The most primitive one is $0$, i.e. the constant zero itself.
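The following Python sketch makes the idea concrete. The domain $\{1, 2, 3\}$ and the extra factor $x^2 + 7$ are hypothetical choices, not necessarily the domain used in the example above. The product $(x - 1)(x - 2)(x - 3)$ vanishes on every point of the domain. Multiplying it by any other polynomial keeps it zero there while raising the degree.

```python
def evaluate(coeffs, x):
    """Evaluate [a0, a1, ..., an] (lowest degree first) at x."""
    return sum(a * x**k for k, a in enumerate(coeffs))

def multiply(A, B):
    """Schoolbook multiplication of two coefficient arrays."""
    C = [0] * (len(A) + len(B) - 1)
    for i, a in enumerate(A):
        for j, b in enumerate(B):
            C[i + j] += a * b
    return C

domain = [1, 2, 3]          # hypothetical finite domain

# Z(x) = (x - 1)(x - 2)(x - 3) is zero at every point of the domain
Z = [1]
for r in domain:
    Z = multiply(Z, [-r, 1])        # multiply by (x - r)

# Z(x) * (x^2 + 7) is still zero on the domain, but has degree 5
Z_higher = multiply(Z, [7, 0, 1])

for poly in (Z, Z_higher):
    assert all(evaluate(poly, x) == 0 for x in domain)
    print("degree", len(poly) - 1, poly)
```

Both polynomials vanish on the whole domain yet are not the constant $0$. Neither has degree below 3, the size of the domain.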

Note: If the domain is taken as the set of all real numbers, then the only zero polynomial we can have is $0$, since no other polynomial evaluates to zero for all real numbers.

Now observe the number of roots and degree for each of the example zero polynomials we looked at.

Roots of a polynomial are the values in the domain at which the polynomial evaluates to zero. The degree of a polynomial, as you well know, is the highest power of the variable with a nonzero coefficient.

- Roots- , Degree-

- Roots- , Degree-

- Roots- , Degree-

All of them evaluate to zero on ; therefore, the number of roots is , whereas the degree can be varied by modifying , whose degree is .

Also note that the constant polynomial $0$ has more roots than its degree. This is only possible for a zero polynomial, specifically the primitive one, $0$. For any other polynomial, the number of roots is always less than or equal to the degree.

Consider a nonzero polynomial of degree $n$: it can have at most $n$ roots (or intersections with the $x$-axis). The primitive zero polynomial $0$ is the only exception, as it has more roots than its degree.

For example,

a quadratic equation (degree $2$), such as , has two roots, i.e. and .

For a quadratic equation to have more than two roots, it must be identically zero, i.e. all of its coefficients must be $0$.

Now, coming back to our argument: since $d(x)$ evaluates to zero at $n + 1$ points, it has at least $n + 1$ roots, which is greater than its degree (at most $n$). Therefore $d(x)$ must be the zero polynomial, $d(x) = 0$.

This implies that $p(x) = q(x)$, meaning they are the same polynomial. Therefore, the polynomial of lowest degree that interpolates a set of points with distinct $x$-coordinates is unique.